Pandas for Data Analysis: How to Get Data from APIs

Requirements

We need the “requests” and “pandas” packages to pull data from API sources. The requests package is used to make the API request, while pandas is used to create the DataFrame.

In our Jupyter notebook, we start by importing both packages.

To test these imports and see how data is pulled from an API source, we will request data from the USGS API. This is earthquake data that is recorded every day. We will pull data for the 30 days up to yesterday, so we’ll also need the datetime package. All the imports are as follows:
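A minimal sketch of that import cell could look like this. The `dt` and `pd` aliases are conventions chosen here, not something the original notebook is known to use:

```python
import datetime as dt  # date arithmetic for the 30-day window

import pandas as pd    # builds the DataFrame
import requests        # makes the HTTP request
```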

Now that we’ve got all the imports ready, we can start pulling data from the USGS API. With the requests package, we can make a GET request to the USGS API and obtain a JSON payload. The data we want covers the 30 days ending yesterday, so the code that retrieves it has to specify that window. This can be done with the help of timedelta from the datetime package. Here is the code:
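The original code cell is not available, so what follows is a sketch of how the query could be set up. The endpoint URL and the `starttime` and `endtime` parameter names are assumptions based on the USGS earthquake catalog service; only ‘format’: ‘geojson’ comes from the text itself.

```python
import datetime as dt

# Assumed endpoint: the USGS earthquake catalog query service.
api = 'https://earthquake.usgs.gov/fdsnws/event/1/query'

# The window ends yesterday and starts 30 days before that.
yesterday = dt.date.today() - dt.timedelta(days=1)

# Query parameters; 'starttime' and 'endtime' are assumed parameter names.
payload = {
    'format': 'geojson',
    'starttime': (yesterday - dt.timedelta(days=30)).isoformat(),
    'endtime': yesterday.isoformat(),
}

print(payload)
```

Dates are converted to ISO strings (YYYY-MM-DD) so that the values sent in the query string are explicit.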

Now that we have the payload and api ready, we can pass both objects to the GET HTTP method to tell the server what we want.
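As a rough sketch, the request could be made like this. The helper name `fetch_earthquakes` is hypothetical, and the endpoint and date parameter names are the same assumptions as before. The preview step only builds the URL, so it runs without touching the network.

```python
import datetime as dt

import requests

# Assumed endpoint and parameter names for the USGS query service.
api = 'https://earthquake.usgs.gov/fdsnws/event/1/query'
yesterday = dt.date.today() - dt.timedelta(days=1)
payload = {
    'format': 'geojson',
    'starttime': (yesterday - dt.timedelta(days=30)).isoformat(),
    'endtime': yesterday.isoformat(),
}


def fetch_earthquakes(url, params, timeout=30):
    """Hypothetical helper: send the GET request with params as the query string."""
    return requests.get(url, params=params, timeout=timeout)


# Build (but do not send) the request to see the URL that would be called.
preview = requests.Request('GET', api, params=payload).prepare()
print(preview.url)

# In the notebook, this line performs the actual request:
# response = fetch_earthquakes(api, payload)
```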

Before creating the DataFrame from the response object obtained from the server, we have to check the response to confirm that the server agreed to give us the information. This can be done with the “status_code” attribute as follows:
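To keep this sketch runnable offline, it uses a stand-in response object with the status code set by hand. In the notebook, `response` would be the object returned by `requests.get`.

```python
import requests

# Stand-in for the real response so the example does not need the network.
response = requests.Response()
response.status_code = 200

print(response.status_code)  # the numeric HTTP status
print(response.ok)           # True when the status is below 400
```

A stricter option is `response.raise_for_status()`, which raises an exception for 4xx and 5xx responses instead of leaving the check to us.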

A 200 response, as seen above, shows that the request succeeded and the server (the USGS API) has agreed to give us the information we requested.

Now, it’s time to check the data obtained. The data comes in JSON format because that’s what we asked for (‘format’: ‘geojson’). Once parsed, it is a Python dictionary, and dictionaries provide plenty of methods that can be used to inspect the data.

Obtain JSON Payload

We can isolate the data from the JSON response as follows:
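In the notebook this step is a single call to `response.json()`. The sketch below mimics it offline by parsing a tiny hand-written body with `json.loads`, which does the same kind of JSON-to-dictionary conversion. The key names are assumed from the USGS GeoJSON layout, and the real payload is far larger.

```python
import json

# Hand-written stand-in for the response body (response.text).
sample_body = '{"type": "FeatureCollection", "metadata": {}, "features": [], "bbox": []}'

# In the notebook this would be: earthq_json = response.json()
earthq_json = json.loads(sample_body)

print(type(earthq_json))
```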

The earthq_json above is a dictionary that carries the data. We can confirm the type and check the keys using the dictionary method "keys":
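Here is a small sketch of that check. The dictionary is a hand-made stand-in with empty placeholder values. Its four top-level keys are an assumption about what the USGS payload contains.

```python
# Stand-in for the parsed response; values are placeholders.
earthq_json = {
    'type': 'FeatureCollection',
    'metadata': {},
    'features': [],
    'bbox': [],
}

print(type(earthq_json))   # confirm we are dealing with a dict
print(earthq_json.keys())  # list the top-level keys
```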

We can inspect each of the keys listed above to see what data it holds. After looking through them, 'features' is the one most likely to carry the data we need. Let's check its type using the type function:

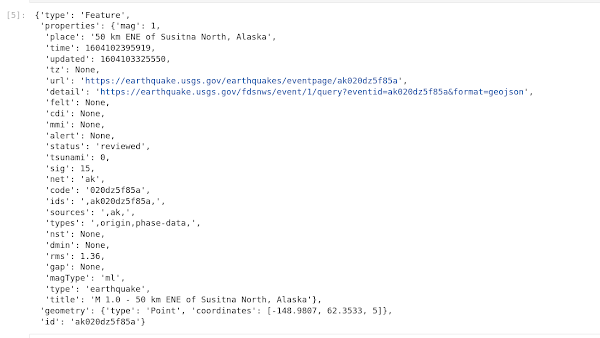

As seen above, the 'features' key contains a list. We can pick out a small section of the data using an index.
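The sketch below shows the idea on a two-entry stand-in. The entry layout (`properties`, `geometry`, `id`) is assumed from the GeoJSON format, and every value is made up for illustration.

```python
# Stand-in for the parsed response; all values are invented.
earthq_json = {
    'type': 'FeatureCollection',
    'features': [
        {
            'type': 'Feature',
            'properties': {'mag': 1.2, 'place': 'sample place A'},
            'geometry': {'type': 'Point', 'coordinates': [0.0, 0.0, 0.0]},
            'id': 'sample1',
        },
        {
            'type': 'Feature',
            'properties': {'mag': 4.5, 'place': 'sample place B'},
            'geometry': {'type': 'Point', 'coordinates': [1.0, 1.0, 1.0]},
            'id': 'sample2',
        },
    ],
}

features = earthq_json['features']
print(type(features))      # a list of entries

first_entry = features[0]  # index 0 picks the first earthquake
print(first_entry)
```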

We are almost done. From the screenshot above, we need to pick the properties section out of every entry in the features JSON array to create our DataFrame. A convenient way to do this is a list comprehension.
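A minimal sketch of that list comprehension, run on a made-up `features` list, follows. The variable name `earthq_data` is chosen here for illustration.

```python
# Made-up entries shaped like the items in the 'features' list.
features = [
    {'type': 'Feature', 'properties': {'mag': 1.2, 'place': 'sample place A'}, 'id': 'sample1'},
    {'type': 'Feature', 'properties': {'mag': 4.5, 'place': 'sample place B'}, 'id': 'sample2'},
]

# Keep only the 'properties' dictionary of each entry.
earthq_data = [quake['properties'] for quake in features]

print(earthq_data)
```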

Finally, we can create a DataFrame using what we have obtained above:
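Passing a list of dictionaries to `pd.DataFrame` gives one row per dictionary and one column per key. Here is a sketch on made-up data, with `earthq_data` standing in for the list of properties:

```python
import pandas as pd

# Made-up stand-in for the list of 'properties' dictionaries.
earthq_data = [
    {'mag': 1.2, 'place': 'sample place A'},
    {'mag': 4.5, 'place': 'sample place B'},
]

df = pd.DataFrame(earthq_data)

print(df.shape)
print(list(df.columns))
```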

We can inspect the first 3 rows of the generated DataFrame using the DataFrame head method as follows:
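On a small made-up frame with four rows, the call would look like this:

```python
import pandas as pd

# Four invented rows so that head(3) visibly drops the last one.
df = pd.DataFrame([
    {'mag': 1.2, 'place': 'sample place A'},
    {'mag': 4.5, 'place': 'sample place B'},
    {'mag': 2.8, 'place': 'sample place C'},
    {'mag': 0.9, 'place': 'sample place D'},
])

first_rows = df.head(3)  # first 3 rows, original index kept
print(first_rows)
```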
